The Privacy Lab is led by Prof. Apu Kapadia in the Luddy School of Informatics, Computing, and Engineering at Indiana University. Our goal is to advance research in computer security and privacy through a human-centric approach. For an overview of our research, see our recent publications. Visit the People page to see the faces behind our research.

Current happenings at our lab

Roundup of a Productive Day 2020!

August 15, 2020

- ASSETS paper on the shared privacy considerations of visually impaired people and bystanders in the context of camera-based assistive technologies.

- CSCW paper on the concept of tangible privacy for designing IoT devices that offer better privacy assurances.

- USENIX Security paper on the privacy concerns of visually impaired users of camera-based assistive applications supported by human volunteers. Watch the 12-min video.

- Oakland paper on our paradoxical finding that photo sharing can increase when people are told to think about the photo subjects’ privacy. Watch the 17-min video.

- Oakland paper on how to automatically detect subjects and bystanders in photos by mimicking human reasoning. Watch the 1-min or 16-min videos.

- ConPro paper on how often people do (or don’t) change passwords after widely publicized breaches. Watch the 15-min video.

- CSCW paper on how people sanction each other on social media for privacy violations.

- ACM TOCHI article on privacy norms and preferences for photos shared online.

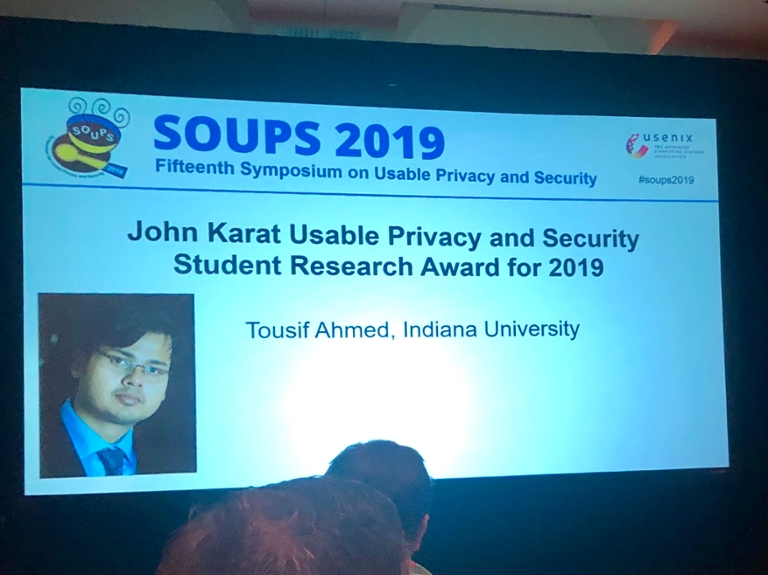

Tousif Ahmed has received the prestigious 2019 John Karat Usable Privacy and Security Student Research Award for his work on privacy for people with visual impairments! The Karat award was presented at the opening of SOUPS, and we are all proud of Tousif! Read more about it in our school’s article.

Here are three selected articles related to his dissertation:

- UbiComp ’18: Up to a Limit? Privacy Concerns of Bystanders and Their Willingness to Share Additional Information with Visually Impaired Users of Assistive Technologies

- SOUPS ’16: Addressing Physical Safety, Security, and Privacy for People with Visual Impairments

- CHI ’15: Privacy Concerns and Behaviors of People with Visual Impairments

August 19, 2019

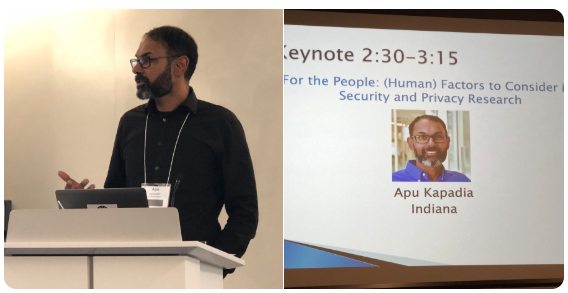

Apu Kapadia gave the keynote talk at the Midwest Security Workshop (2019) titled “For the People: (Human) Factors to Consider in Security and Privacy Research.”

Talk Abstract: In this talk, I will make a case for considering human factors in your research. Although not all of us may ‘need’ to run user studies, your research could benefit from a deeper understanding of what to build, what not to build, why to build it, and how people might benefit from your inventions. Many of you may be reluctant to enter this space of ‘usable security’ – I hope to demystify some of the methods by weaving in a narrative of my explorations on privacy in the context of wearable cameras. It is my hope that this talk will inspire some of you to factor in the human because, in the end, we are securing systems for the people, attacked by the people.

August 19, 2019

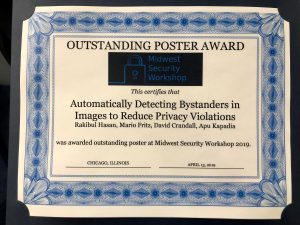

Rakib Hasan won the Best Poster Award at the Midwest Security Workshop (2019) for his work on “Automatically Detecting Bystanders in Images to Reduce Privacy Violations”!

January 11, 2019

Pervasive photo sharing on online social media platforms can cause unintended privacy violations when elements of an image reveal sensitive information. Prior studies have identified image obfuscation methods (e.g., blurring) to enhance privacy, but many of these methods adversely affect viewers’ satisfaction with the photo, which may cause people to avoid using them. We study the novel hypothesis that it may be possible to restore viewers’ satisfaction by ‘boosting’ or enhancing the aesthetics of an obscured image, thereby compensating for the negative effects of a privacy transform. Using a between-subjects online experiment, we studied the effects of three artistic transformations on images that had objects obscured using three popular obfuscation methods validated by prior research. Our findings suggest that using artistic transformations can mitigate some negative effects of obfuscation methods, but more exploration is needed to retain viewer satisfaction.

Read more about it in our CHI 2019 paper.

September 11, 2018

High-fidelity, and often privacy-invasive, sensors are now becoming pervasive in our everyday environments. At home, digital assistants can constantly listen for instructions and security cameras can be on the lookout for unusual activity. Whereas an individual’s physical actions in their own home were once private, networked cameras and microphones can now give rise to electronic privacy concerns about one’s physical behaviors. Casual conversations and encounters, once thought to be private and ephemeral, may now be captured and disseminated or archived digitally. These sensing devices benefit their users in many ways, hence their popularity, yet those same users may face serious privacy violations. A major problem with current sensing devices is that occupants of a space often cannot tell, upon visual inspection, whether an audio or video sensor is indeed off. For example, sensors that have been hacked may record people without their consent even when their interfaces (e.g., small indicator lights) claim that they are off. The goal of this project is to explore privacy-enhanced sensor designs that provide people with the knowledge and assurance of when they are being recorded and what data is being captured and disseminated. Whereas purely software mechanisms may not inspire trust, physical mechanisms (e.g., a camera’s physical lens cap) can provide a more tangible privacy guarantee to people. This project explores novel, physical designs of sensors that convey a clear and definite sense of assurance to people about their physical privacy.

Through a collaboration with University of Pittsburgh professors Rosta Farzan and Adam J. Lee, this project brings together expertise in computer security and privacy, access control, human-computer interaction, and social computing. Through this interdisciplinary team, the project makes socio-technical contributions to both theory and practice by: (1) understanding the privacy concerns, needs, and behaviors of people in the face of increased sensing in physical environments; (2) exploring the design space for hardware sensing platforms to convey meaningful (‘tangible’) assurances of privacy to people by their physical appearance and function; (3) exploring visual indicators of what information is being sent over the network; and (4) exploring alternative sensor designs that trade off sensing fidelity for higher privacy. Together these designs combine hardware and software techniques to tangibly and visually convey a sense of privacy to people impacted by the sensors.

August 30, 2018

With the rise of digital photography and social networking, people are capturing and sharing photos on social media at an unprecedented rate. Such sharing may lead to privacy concerns for the people captured in such photos, e.g., in the context of embarrassing photos that go “viral” and are shared widely. At worst, online photo sharing can result in cyber-bullying that can greatly affect the subjects of such photos. This research builds on the observation that viewers of a photo are mindful of the privacy of other people, and could be influenced to protect their privacy when sharing photos online. First, the research will study how people think and feel about sharing photos of themselves and others. This will involve measuring their behavioral and physiological responses as they make their decisions. Second, the research will identify to what degree these decisions can be altered through technical mechanisms that are designed to encourage responsible image sharing activity that respects the privacy of people captured in the photo. The investigators will involve graduate and undergraduate students in this research.

This project brings together expertise in the psychological and brain sciences (Bennett Bertenthal) and computer security and privacy (Apu Kapadia) to explore socio-technical solutions for privacy in the context of photo sharing. In particular, the research focuses on first developing an understanding of people’s cognitive and affective dynamics while sharing photos on social media. The research seeks to 1) determine the effects of attention, depth of processing, and decisional uncertainty on image sharing decisions; and 2) identify the relationship between affective responses to images and decisions to share images on social media. Building on the knowledge gained from these experiments, the research seeks to develop and test a series of socio-technical intervention strategies such as face-highlighting and identity-priming, which are informed by a novel theoretical and methodological framework called objectification theory, or the idea that people are often motivated to see other people in terms of particular features or as objects of entertainment. These interventions will counteract objectification by encouraging viewers to consider the personal identity or privacy of the people depicted in each image before making image-sharing decisions. Thus, the mechanisms for addressing bystander privacy will be grounded in a psychological study that understands and manipulates the elements of decision making while sharing photos.

August 30, 2018

The emergence of augmented reality and computer-vision-based tools offers new opportunities to visually impaired persons (VIPs). Solutions that help VIPs in social interactions by providing information (age, gender, attire, expressions, etc.) about people in the vicinity are becoming available. Although such assistive technologies are already collecting and sharing such information with VIPs, the views, perceptions, and preferences of sighted bystanders about such information sharing remain unexplored. Bystanders may be willing to share more information for assistive uses, but it remains to be explored to what degree they are willing to share various kinds of information and what might encourage additional sharing based on the contextual needs of VIPs. We describe the first empirical study of information sharing preferences of sighted bystanders of assistive devices. We conducted a survey-based study using a contextual method of inquiry with 62 participants, followed by nine semi-structured interviews to shed more light on our key quantitative findings. We find that bystanders are more willing to share some kinds of personal information with VIPs and are willing to share additional information if higher security assurances can be made by improving their control over how their information is shared.

Read about it in our UbiComp 2018 paper.

August 30, 2018

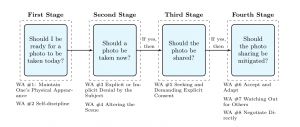

Pervasive photography and the sharing of photos on social media pose a significant challenge to undergraduates’ ability to manage their privacy. Drawing from an interview-based study, we find undergraduates feel a heightened state of being surveilled by their peers and rely on innovative workarounds – negotiating the terms and ways in which they will and will not be recorded by technology-wielding others – to address these challenges. We present our findings through an experience model of the life span of a photo, including an analysis of college students’ workarounds to deal with the technological challenges they encounter as they manage potential threats to privacy at each of the four stages we propose. We further propose a set of design directions that address our users’ current workarounds at each stage. We argue for a holistic perspective on privacy management that considers workarounds across all these stages. In particular, designs for privacy need to more equitably distribute the technical power of determining what happens with and to a photo among all the stakeholders of the photo, including subjects and bystanders, rather than the photographer alone.

Read more in our SOUPS 2018 paper.

March 1, 2018

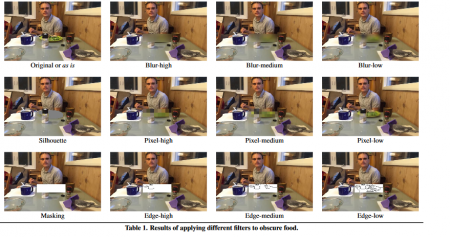

With the rise of digital photography and social networking, people are sharing personal photos online at an unprecedented rate. In addition to their main subject matter, photographs often capture various incidental information that could harm people’s privacy. In our paper, ‘Viewer Experience of Obscuring Scene Elements in Photos to Enhance Privacy’, we explore methods to keep this sensitive information private while preserving image utility. While common image filters, such as blurring, may help obscure private content, they affect the utility and aesthetics of the photos, which is important since the photos are mainly shared on social media for human consumption. Existing research on privacy-enhancing image filters predominantly focuses on obscuring faces or lacks a systematic study of how filters affect image utility. To understand the trade-offs when obscuring various sensitive aspects of images, we study eleven filters applied to obfuscate twenty different objects and attributes, and evaluate how effectively they protect privacy and preserve image utility and aesthetics for human viewers.

Read more in our CHI ’18 paper.