October 12, 2017

A critical element for a successful transition is the ability to disclose, or make known, one’s struggles. In our paper ‘Challenges in Transitioning from Civil to Military Culture: Hyper-Selective Disclosure through ICTs’ we explore the transition disclosure practices of Reserve Officers’ Training Corps (ROTC) students who are transitioning from an individualistic culture to one that is highly collective. As ROTC students routinely evaluate their peers through a ranking system, the act of disclosure may impact a student’s ability to secure limited opportunities within the military upon graduation. We interviewed 14 ROTC students to study how they use information and communication technologies (ICTs) to disclose their struggles in a hyper-competitive environment. We find that they engage in a process of highly selective disclosure, choosing different groups to disclose to based on the types of issues they face. We share implications for designing ICTs that better facilitate how ROTC students cope with personal challenges during their formative transition into the military.

Read more in our CSCW 2018 paper.

October 12, 2017

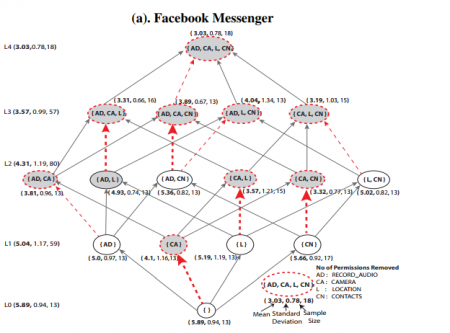

Millions of apps available to smartphone owners request various permissions to resources on the devices, including sensitive data such as location and contact information. Disabling permissions for sensitive resources could improve privacy but can also impact the usability of apps in ways users may not be able to predict. In our paper ‘To Permit or Not to Permit, That is the Usability Question: Crowdsourcing Mobile Apps’ Privacy Permissions Settings’ we study an efficient approach that ascertains the impact of disabling permissions on the usability of apps through large-scale, crowdsourced user testing. The ultimate goal is to make recommendations to users about which permissions can be disabled for improved privacy without sacrificing usability.

We replicate and significantly extend previous analysis that showed the promise of a crowdsourcing approach where crowd workers test and report back on various configurations of an app. Through a large, between-subjects user experiment, our work provides insight into the impact of removing permissions within and across different apps. We had 218 users test Facebook Messenger, 227 test Instagram, and 110 test Twitter. We find that it is possible to increase user privacy by disabling app permissions while also maintaining app usability.

Paper ‘Cartooning for Enhanced Privacy in Lifelogging and Streaming Videos’ Presented at CV-COPS ’17

October 12, 2017

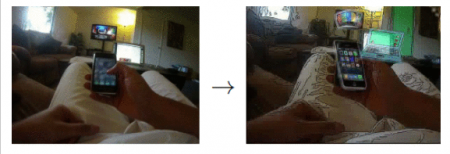

In the paper ‘Cartooning for Enhanced Privacy in Lifelogging and Streaming Videos’, we describe an object replacement approach whereby privacy-sensitive objects in videos are replaced by abstract cartoons taken from clip art. We used a combination of computer vision, deep learning, and image processing techniques to detect objects, abstract details, and replace them with cartoon clip art. We conducted a user study with 85 users to discern the utility and effectiveness of our cartoon replacement technique. The results suggest that our object replacement approach preserves a video’s semantic content while improving its privacy by obscuring details of objects.

October 12, 2017

Major online messaging services such as Facebook Messenger and WhatsApp are starting to provide users with real-time information about when recipients read their messages. This useful feature has the potential to negatively impact privacy as well as cause concern over access to self. In the paper ‘Was My Message Read?: Privacy and Signaling on Facebook Messenger’ we surveyed 402 senders and 316 recipients on Mechanical Turk. We looked at senders’ use of and reactions to the ‘message seen’ feature, and recipients’ privacy and signaling behaviors in the face of such visibility. Our findings indicate that senders experience a range of emotions when their message is not read, or is read but not answered immediately. Recipients also engage in various signaling behaviors in the face of visibility by either replying or not replying immediately.

October 12, 2017

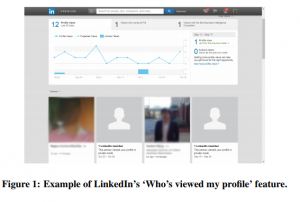

Social networking sites are starting to offer users services that provide information about the composition and behavior of their viewers. LinkedIn’s ‘Who viewed my profile’ feature is an example. Providing information about content viewers to content publishers raises new privacy concerns for viewers of social networking sites. In the paper ‘Viewing the Viewers: Publishers’ Desires and Viewers’ Privacy Concerns in Social Networks’ we report on 718 Mechanical Turk respondents. Of these, 402 were surveyed on publishers’ use and expectations of information about their viewers, and 316 were surveyed about privacy behaviors and concerns in the face of such visibility. In some instances, such as dating sites, gender differences exist about what information respondents felt should be shared with publishers and required of viewers.

October 12, 2017

Various assistive devices are able to give greater independence to people with visual impairments both online and offline. Significant work remains to understand and address their safety, security, and privacy concerns, especially in the physical, offline world. People with visual impairments are particularly vulnerable to physical assault and theft, shoulder-surfing attacks, and being overheard during private conversations. In the paper ‘Understanding the Physical Safety, Security, and Privacy Concerns of People with Visual Impairments’ we conduct two sets of interviews to find out how people with visual impairments manage these concerns and how assistive technologies can help. The paper also proposes design considerations for camera-based devices that would help people with visual impairments monitor for potential threats around them.

October 12, 2017

People with visual impairments face numerous obstacles in their daily lives. Due to these obstacles, people with visual impairments face a variety of physical privacy concerns. Researchers have recently studied how emerging technologies, such as wearable devices, can help address these concerns. In the paper ‘Addressing Physical Safety, Security, and Privacy for People with Visual Impairments’ we conduct 19 interviews with participants who have visual impairments in the greater San Francisco metropolitan area. Our participants’ detailed accounts illuminated three topics related to physical privacy. The first is the safety and security concerns of people with visual impairments in urban environments, such as feared and actual instances of assault. The second is their behaviors and strategies for protecting physical safety. The last is a set of refined design considerations for future wearable devices that could enhance their awareness of surrounding threats.

October 12, 2017

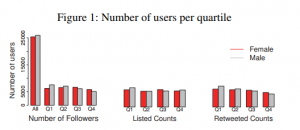

Social media offers people the potential to communicate freely regardless of their status. In practice, social categories like gender may still bias online communication, replicating offline disparities. In the paper ‘Twitter’s Glass Ceiling: The Effect of Perceived Gender on Online Visibility’ we study over 94,000 Twitter users to investigate the association between perceived gender and measures of online visibility. We find that users perceived as female experience a ‘glass ceiling’, similar to the barrier women face in attaining higher positions in companies. Being perceived as female is associated with more visibility for users in lower quartiles of visibility, but the opposite is true for the most visible users, where being perceived as male is strongly associated with more visibility. Our analysis suggests that gender presented in social media profiles likely frames interactions as well as perpetuates old inequalities online.

February 10, 2016

Our work on detecting computer monitors within photos has been accepted to ACM CHI 2016 and has received an Honorable Mention Award (Top 4% of submissions).

Low-cost, lightweight wearable cameras let us record (or ‘lifelog’) our lives from a ‘first-person’ perspective for purposes ranging from fun to therapy. But they also capture private information that people may not want to be recorded, especially if images are stored in the cloud or visible to other people. For example, recent studies suggest that computer screens may be lifeloggers’ single greatest privacy concern, because many people spend a considerable amount of time in front of devices that display private information. In this paper, we investigate using computer vision to automatically detect computer screens in photo lifelogs. We evaluate our approach on an existing in-situ dataset of 36 people who wore cameras for a week, and show that our technique could help manage privacy in the upcoming era of wearable cameras.

Read more about it at our Project Page. Here are our paper and the extended technical report.

February 16, 2015

The IU Privacy Lab led by PI Apu Kapadia has four papers accepted at CHI 2015! The first paper titled Privacy Concerns and Behaviors of People with Visual Impairments is a qualitative study that reports on interviews with 14 visually impaired people and suggests new directions for improving the privacy of the visually impaired using wearable technologies. The second paper titled Crowdsourced Exploration of Security Configurations explores the use of crowdsourcing to efficiently determine restricted sets of permissions that can strike reasonable tradeoffs between privacy and usability for smartphone apps.

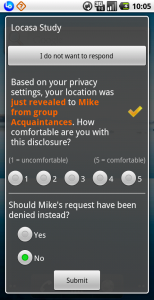

The third paper (Note) titled Sensitive Lifelogs: A Privacy Analysis of Photos from Wearable Cameras is a followup study to our UbiComp 2014 paper titled Privacy Behaviors of Lifeloggers using Wearable Cameras. For this Note we analyzed the photos collected in our lifelogging study, seeking to understand what makes a photo private and what we can learn about privacy in this new and very different context where photos are captured automatically by one’s wearable camera. The fourth paper (Note) titled Interrupt Now or Inform Later?: Comparing Immediate and Delayed Privacy Feedback follows up on our CHI 2014 paper titled Reflection or Action?: How Feedback and Control Affect Location Sharing Decisions. This Note explored the effect of providing immediate vs. delayed privacy feedback (e.g., for location accesses). We found that the sense of privacy violation was heightened when feedback was immediate but not actionable, a finding that has implications for how and when privacy feedback should be provided.

August 28, 2014

PIs Apu Kapadia and David Crandall at IU, and Denise Anthony at Dartmouth College, have received a $1.2M collaborative NSF award (IU Share: $800K) to study privacy in the context of wearable cameras over the next four years. The ubiquity of cameras, both traditional and wearable, will soon create a new era of visual sensing applications, raising significant implications for individuals and society, both beneficial and hazardous. This research couples a sociological understanding of privacy with an investigation of technical mechanisms to address these needs. Issues such as context (e.g., capturing images for public use may be okay at a public event, but not in the home) and content (are individuals recognizable?) will be explored both on technical and sociological fronts: What can we determine about images, what does this mean in terms of privacy risk, and how can systems protect against risk to privacy?

Read more about this grant, and our project.

August 26, 2014

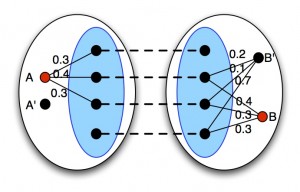

Researchers have shown how ‘network alignment’ techniques can be used to map nodes from a reference graph into an anonymized social-network graph. These algorithms, however, are often sensitive to larger network sizes, the number of seeds, and noise, which may be added to preserve privacy. We propose a divide-and-conquer approach to strengthen the power of such algorithms. Our approach partitions the networks into ‘communities’ and performs a two-stage mapping: first at the community level, and then for the entire network. Through extensive simulation on real-world social network datasets, we show how such community-aware network alignment improves de-anonymization performance under high levels of noise, large network sizes, and a low number of seeds. Read more in our paper, which will be presented at ACM CCS 2014.
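To make the two-stage idea concrete, here is a minimal Python sketch. It is not the algorithm from the paper: it assumes the community labels are already given, matches communities by a simple vote over seed pairs, and then grows the mapping with a greedy neighbor-overlap heuristic chosen only for illustration. The function names (`match_communities`, `align`) and the toy six-node graphs are hypothetical.

```python
from collections import Counter


def match_communities(ref_comm, anon_comm, seeds):
    """Stage 1: map each reference community to the anonymized community
    that receives the most votes from the known seed pairs."""
    votes = {}
    for ref_node, anon_node in seeds.items():
        counter = votes.setdefault(ref_comm[ref_node], Counter())
        counter[anon_comm[anon_node]] += 1
    return {c: counter.most_common(1)[0][0] for c, counter in votes.items()}


def align(ref_adj, anon_adj, ref_comm, anon_comm, seeds):
    """Stage 2: grow the seed mapping node by node, only considering
    candidates that lie in the matched community (illustrative heuristic)."""
    comm_map = match_communities(ref_comm, anon_comm, seeds)
    mapping = dict(seeds)
    used = set(mapping.values())
    progress = True
    while progress:
        progress = False
        for u in sorted(ref_adj):
            if u in mapping or ref_comm[u] not in comm_map:
                continue
            target = comm_map[ref_comm[u]]
            # Anonymized images of u's neighbors that are already mapped.
            mapped_nbrs = {mapping[n] for n in ref_adj[u] if n in mapping}
            best, best_score = None, 0
            for v in sorted(anon_adj):
                if v in used or anon_comm[v] != target:
                    continue
                score = len(mapped_nbrs & anon_adj[v])
                if score > best_score:
                    best, best_score = v, score
            if best is not None:
                mapping[u] = best
                used.add(best)
                progress = True
    return mapping


# Toy data (hypothetical): two path graphs with the same shape.
ref_adj = {"a": {"b"}, "b": {"a", "c"}, "c": {"b", "d"},
           "d": {"c", "e"}, "e": {"d", "f"}, "f": {"e"}}
anon_adj = {1: {2}, 2: {1, 3}, 3: {2, 4}, 4: {3, 5}, 5: {4, 6}, 6: {5}}
ref_comm = {"a": 0, "b": 0, "c": 0, "d": 1, "e": 1, "f": 1}
anon_comm = {1: "X", 2: "X", 3: "X", 4: "Y", 5: "Y", 6: "Y"}
seeds = {"a": 1, "d": 4}  # one known pair per community

result = align(ref_adj, anon_adj, ref_comm, anon_comm, seeds)
print(result)
```

Restricting candidates to the matched community shrinks the search space for each node, which is the intuition behind the divide-and-conquer framing; a real implementation would also need a community detection step and a matching rule that tolerates noise.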

July 2, 2014

A number of wearable ‘lifelogging’ camera devices have been released recently, allowing consumers to capture images and other sensor data continuously from a first-person perspective. While lifelogging cameras are growing in popularity, little is known about privacy perceptions of these devices or what kinds of privacy challenges they are likely to create. To explore how people manage privacy in the context of lifelogging cameras, as well as which kinds of first-person images people consider ‘sensitive,’ we conducted an in situ user study (N = 36) in which participants wore a lifelogging device for a week. Our findings indicate that: 1) some people may prefer to manage privacy through in situ physical control of image collection in order to avoid later burdensome review of all collected images; 2) a combination of factors including time, location, and the objects and people appearing in the photo determines its ‘sensitivity;’ and 3) people are concerned about the privacy of bystanders, despite reporting almost no opposition or concerns expressed by bystanders over the course of the study. Read our paper or visit our project page.

March 8, 2014

PIs Kapadia and Crandall have received a 2014 Google Research Award for their research on privacy in the context of ‘lifelogging’ wearable cameras. We expect that these wearable cameras (see the Narrative Clip and the Autographer in addition to Google Glass) will become commonplace within the next few years, regularly capturing photos to record a first-person perspective of the wearer’s life. The goal of this project is to investigate and build automatic algorithms to organize images from lifelogging cameras, using a combination of computer vision and analysis of sensor data (like GPS, WiFi, accelerometers, etc.), thus empowering users to efficiently manage and share these images in a way that protects their privacy. As a first step, we proposed PlaceAvoider, an approach for recognizing (and avoiding) sensitive spaces within images. Read an article about this work by the MIT Technology Review. Read more about our project here.

January 26, 2014

Owing to the ever-expanding size of social and professional networks, it is becoming cumbersome for individuals to configure information disclosure settings. We used location sharing systems to unpack the nature of discrepancies between a person’s disclosure settings and contextual choices. We conducted an experience sampling study (N = 35) to examine various factors contributing to such divergence. We found that immediate feedback about disclosures without any ability to control the disclosures evoked feelings of oversharing. Moreover, deviation from specified settings did not always signal privacy violation; it was just as likely that settings prevented information disclosure considered permissible in situ. We suggest making feedback more actionable or delaying it sufficiently to avoid a knee-jerk reaction. Our findings also make the case for proactive techniques for detecting potential mismatches and recommending adjustments to disclosure settings, as well as selective control when sharing location with socially distant recipients and visiting atypical locations.

Our paper will appear at CHI 2014. Read more about our Exposure project.

December 5, 2013

A new generation of wearable devices (such as Google Glass and the Narrative Clip) will soon make ‘first-person’ cameras nearly ubiquitous, capturing vast amounts of imagery without deliberate human action. ‘Lifelogging’ devices and applications will record and share images from people’s daily lives with their social networks.

We introduce PlaceAvoider, a technique for owners of first-person cameras to ‘blacklist’ sensitive spaces (like bathrooms and bedrooms). PlaceAvoider recognizes images captured in these spaces and flags them for review before the images are made available to applications. PlaceAvoider performs novel image analysis using both fine-grained image features (like specific objects) and coarse-grained, scene-level features (like colors and textures) to classify where a photo was taken.

Our paper will appear at NDSS 2014. Read more about our ‘Vision for Privacy’ project.

July 10, 2013

Indiana University hosted the interactive and thought-provoking PETools workshop, chaired by Prof. Apu Kapadia and held in conjunction with PETS 2013. The goal of this workshop was to discuss the design of privacy tools aimed at real-world deployments. This workshop brought together privacy practitioners and researchers with the aim to spark dialog and collaboration between these communities. We thank the authors and attendees for a successful workshop! Please check out the program (with links to the abstracts)!

April 9, 2013

Prof. Apu Kapadia’s award from the National Science Foundation (NSF) is titled CAREER: Sensible Privacy: Pragmatic Privacy Controls in an Era of Sensor-Enabled Computing. From the press release: Kapadia will receive $550,887 over the next five years to advance his work in security and privacy in pervasive and mobile computing. Kapadia’s grant will allow him to pursue development of reactive privacy mechanisms that he said could have a profound and positive societal impact by not only helping people control their privacy, but also potentially increasing their participation in sensor-enabled computing. “People need only care about the subset of data and usage scenarios that have the potential to violate their privacy, and this reduces the amount of data to which they must regulate access,” he said. “And people make better decisions concerning such access when these decisions are made in a context where they know how their data is being used.”

March 1, 2013

We introduce PlaceRaider, a proof-of-concept mobile malware that exploits a smartphone’s camera and onboard sensors to reconstruct rich, 3D models of the victim’s indoor space using only opportunistically taken photos. Attackers can use these models to engage in remote reconnaissance and virtual theft of the victim’s environment. We substantiate this threat through human subject studies. Our paper was presented at the 20th Annual Network & Distributed System Security Symposium (NDSS) 2013. For more details, see the PlaceRaider project page.

January 10, 2013

Profs. David Crandall and Apu Kapadia have been awarded $50K of seed funding through the Faculty Research Support Program (FRSP) for their project titled Vision for Privacy: Privacy-aware Crowd Sensing using Opportunistic Imagery. A variety of powerful and potentially transformative ‘visual social sensing’ applications could be created by aggregating data from cameras and sensors on smartphones and emerging technologies such as augmented reality glasses (e.g., Google’s Project Glass). These applications, however, raise major privacy concerns because of the large amount of potentially private data that could be captured. This project investigates techniques to provide guarantees on privacy in the context of such applications. For more details, see our project page.

December 13, 2012

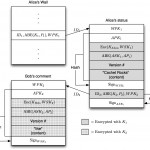

We propose Cachet, a peer-to-peer social-network architecture that provides strong security and privacy guarantees. We leverage cryptographic techniques to protect the confidentiality of data, and design a hybrid structured-unstructured overlay paradigm where social contacts act as trusted caches to help reduce the cryptographic as well as the communication overhead in the network. We presented our paper on Cachet at the 8th ACM International Conference on Emerging Networking Experiments and Technologies (CoNEXT) 2012. For more details, see the Cachet project page.

October 19, 2012

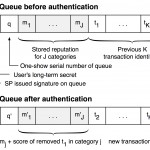

Some users may misbehave under the cover of anonymity by, e.g., defacing webpages on Wikipedia or posting vulgar comments on YouTube. To prevent such abuse, we have explored various anonymous credential schemes to revoke access for misbehaving users while maintaining their anonymity. Our latest scheme, PERM, supports millions of user sessions and makes ‘reputation-based blacklisting’ practical for large-scale deployments. Our paper on PERM was presented at the 19th ACM Conference on Computer and Communications Security. For more details see the accountable anonymity project page.